diff --git a/README.md b/README.md
index 9495dd7..22270ef 100644
--- a/README.md
+++ b/README.md
@@ -9,10 +9,8 @@
# lihang_book_algorithm

-

-哇!看到有人star这个项目了

-本来打算把这个项目当成一个备份的

-但既然有人star了,那么就要好好对待啦!!!

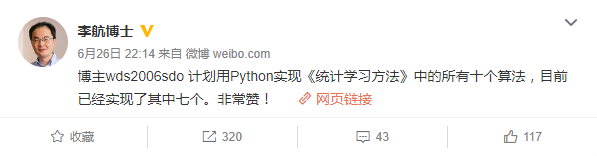

+被李航老师肯定啦!!开心!

+

## 简介

我这里不介绍任何机器学习算法的原理,只是将《统计学习方法》中每一章的算法用我自己的方式实现一遍。

@@ -55,10 +53,13 @@
Pure Python code: [AdaBoost/adaboost.py](https://github.com/WenDesi/lihang_book_algorithm/blob/master/AdaBoost/adaboost.py)
Python/C++ code: [AdaBoost/adaboost_cpp.py](https://github.com/WenDesi/lihang_book_algorithm/blob/master/AdaBoost/adaboost_cpp.py), [AdaBoost/Sign/Sign/sign.h](https://github.com/WenDesi/lihang_book_algorithm/blob/master/AdaBoost/Sign/Sign/sign.h), [AdaBoost/Sign/Sign/sign.cpp](https://github.com/WenDesi/lihang_book_algorithm/blob/master/AdaBoost/Sign/Sign/sign.cpp)
-### Chapter 9: The EM Algorithm and Its Extensions
-Under construction...
+### Chapter 10: Hidden Markov Models
+Blog: [Li Hang's *Statistical Learning Methods*, Chapter 10: Implementing Hidden Markov Models in Python](http://blog.csdn.net/wds2006sdo/article/details/75212599)
+
Code: [hmm/hmm.py](https://github.com/WenDesi/lihang_book_algorithm/blob/master/hmm/hmm.py)
+
## Extra Chapters
+
### Softmax Classifier
Blog: [Implementing a softmax classifier in Python (MNIST dataset)](http://blog.csdn.net/wds2006sdo/article/details/53699778)
Code: [softmax/softmax.py](https://github.com/WenDesi/lihang_book_algorithm/blob/master/softmax/softmax.py)

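The hmm.py file added in the next diff computes alpha, beta, gamma and ksi one element at a time with nested loops. The block below is a quick cross-check: a minimal vectorized NumPy sketch of the same recursions. It is a rewrite for illustration, not the code in hmm.py.

The parameters are assumed from the three-box, red/white ball example that the book uses to illustrate the forward algorithm, with observations encoded as 0 = red and 1 = white. For O = (red, white, red), both the forward and the backward termination steps should give P(O|λ) ≈ 0.130218.

```python
import numpy as np


def forward(A, B, pi, obs):
    """Forward recursion (Eqs. 10.15 and 10.16); returns a T x N table of alpha values."""
    T, N = len(obs), len(pi)
    alpha = np.zeros((T, N))
    alpha[0] = pi * B[:, obs[0]]                          # Eq. 10.15: alpha_1(i) = pi_i * b_i(o_1)
    for t in range(1, T):
        alpha[t] = alpha[t - 1].dot(A) * B[:, obs[t]]     # Eq. 10.16, all states at once
    return alpha


def backward(A, B, obs):
    """Backward recursion (Eqs. 10.19 and 10.20); returns a T x N table of beta values."""
    T, N = len(obs), A.shape[0]
    beta = np.ones((T, N))                                # Eq. 10.19: last row is all ones
    for t in range(T - 2, -1, -1):
        beta[t] = A.dot(B[:, obs[t + 1]] * beta[t + 1])   # Eq. 10.20
    return beta


# Assumed parameters: the book's three-box red/white ball example.
A = np.array([[0.5, 0.2, 0.3],
              [0.3, 0.5, 0.2],
              [0.2, 0.3, 0.5]])
B = np.array([[0.5, 0.5],
              [0.4, 0.6],
              [0.7, 0.3]])
pi = np.array([0.2, 0.4, 0.4])
obs = [0, 1, 0]  # red, white, red (0 = red, 1 = white)

alpha = forward(A, B, pi, obs)
beta = backward(A, B, obs)

p_forward = alpha[-1].sum()                           # forward termination: sum_i alpha_T(i)
p_backward = (pi * B[:, obs[0]] * beta[0]).sum()      # backward termination: sum_i pi_i b_i(o_1) beta_1(i)

# Eq. 10.24 for every t and i: the denominator sum_j alpha_t(j) beta_t(j) equals P(O | lambda)
gamma = alpha * beta / p_forward

# Eq. 10.26 for every t, i, j: alpha_t(i) a_ij b_j(o_{t+1}) beta_{t+1}(j) / P(O | lambda)
emit_next = B[:, obs[1:]].T * beta[1:]                # shape (T-1, N): b_j(o_{t+1}) * beta_{t+1}(j)
xi = alpha[:-1, :, None] * A[None, :, :] * emit_next[:, None, :] / p_forward

print(round(p_forward, 6))  # 0.130218
```

Each row of gamma is a distribution over states, so it should sum to 1. Summing xi over its last axis should reproduce gamma for every t < T. These are cheap consistency checks to run against cal_gamma and cal_ksi in hmm.py.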
diff --git a/hmm/hmm.py b/hmm/hmm.py
new file mode 100644
index 0000000..2894f6a
--- /dev/null
+++ b/hmm/hmm.py
@@ -0,0 +1,238 @@
+# encoding=utf8
+
+import numpy as np
+import csv
+
+class HMM(object):
+    def __init__(self,N,M):
+        self.A = np.zeros((N,N))        # state transition probability matrix
+        self.B = np.zeros((N,M))        # observation probability matrix
+        self.Pi = np.array([1.0/N]*N)   # initial state probability vector
+
+        self.N = N  # number of possible states
+        self.M = M  # number of possible observations
+
+    def cal_probality(self, O):
+        self.T = len(O)
+        self.O = O
+
+        self.forward()
+        return sum(self.alpha[self.T-1])  # P(O | lambda) = sum_i alpha_T(i)
+
+    def forward(self):
+        """
+        Forward algorithm
+        """
+        self.alpha = np.zeros((self.T,self.N))
+
+        # Eq. 10.15: alpha_1(i) = pi_i * b_i(o_1)
+        for i in range(self.N):
+            self.alpha[0][i] = self.Pi[i]*self.B[i][self.O[0]]
+
+        # Eq. 10.16: alpha_{t+1}(i) = [sum_j alpha_t(j) * a_ji] * b_i(o_{t+1})
+        for t in range(1,self.T):
+            for i in range(self.N):
+                total = 0
+                for j in range(self.N):
+                    total += self.alpha[t-1][j]*self.A[j][i]
+                self.alpha[t][i] = total * self.B[i][self.O[t]]
+
+    def backward(self):
+        """
+        Backward algorithm
+        """
+        self.beta = np.zeros((self.T,self.N))
+
+        # Eq. 10.19: beta_T(i) = 1
+        for i in range(self.N):
+            self.beta[self.T-1][i] = 1
+
+        # Eq. 10.20: beta_t(i) = sum_j a_ij * b_j(o_{t+1}) * beta_{t+1}(j)
+        for t in range(self.T-2,-1,-1):
+            for i in range(self.N):
+                for j in range(self.N):
+                    self.beta[t][i] += self.A[i][j]*self.B[j][self.O[t+1]]*self.beta[t+1][j]
+
+    def cal_gamma(self, i, t):
+        """
+        Eq. 10.24: probability of being in state i at time t, given O
+        """
+        numerator = self.alpha[t][i]*self.beta[t][i]
+        denominator = 0
+
+        for j in range(self.N):
+            denominator += self.alpha[t][j]*self.beta[t][j]
+
+        return numerator/denominator
+
+    def cal_ksi(self, i, j, t):
+        """
+        Eq. 10.26: probability of being in state i at time t and in state j at time t+1, given O
+        """
+
+        numerator = self.alpha[t][i]*self.A[i][j]*self.B[j][self.O[t+1]]*self.beta[t+1][j]
+        denominator = 0
+
+        # separate loop variables so the i, j arguments are not overwritten
+        for u in range(self.N):
+            for v in range(self.N):
+                denominator += self.alpha[t][u]*self.A[u][v]*self.B[v][self.O[t+1]]*self.beta[t+1][v]
+
+        return numerator/denominator
+
+    def init(self):
+        """
+        Randomly initialize A and B so that every row sums to 1
+        (Pi keeps the uniform value set in __init__)
+        """
+        import random
+        for i in range(self.N):
+            randomlist = [random.randint(0,100) for t in range(self.N)]
+            Sum = float(sum(randomlist))  # float avoids integer division under Python 2
+            for j in range(self.N):
+                self.A[i][j] = randomlist[j]/Sum
+
+        for i in range(self.N):
+            randomlist = [random.randint(0,100) for t in range(self.M)]
+            Sum = float(sum(randomlist))  # float avoids integer division under Python 2
+            for j in range(self.M):
+                self.B[i][j] = randomlist[j]/Sum
+
+    def train(self, O, MaxSteps = 100):
+        self.T = len(O)
+        self.O = O
+
+        # Initialization
+        self.init()
+
+        step = 0
+        # Iterate
+        while step