Abstract

Transient changes in the firing of midbrain dopamine neurons have been closely tied to the unidimensional value-based prediction error contained in temporal difference reinforcement learning models. However, whereas an abundance of work has now shown how well dopamine responses conform to the predictions of this hypothesis, far fewer studies have challenged its implicit assumption that dopamine is not involved in learning value-neutral features of reward. Here, we review studies in rats and humans that put this assumption to the test, and which suggest that dopamine transients provide a much richer signal that incorporates information that goes beyond integrated value.
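
For orientation, the value-based prediction error in standard temporal difference models is usually written in the textbook form below. The symbols are chosen here for illustration and are not taken from the article itself:

```latex
\delta_t = r_t + \gamma \, V(s_{t+1}) - V(s_t)
```

Here $r_t$ is the reward received at time $t$, $\gamma \in [0, 1]$ is a discount factor, and $V(s)$ is the current estimate of the value of state $s$. The error $\delta_t$ is a single scalar, which is the sense in which the signal is unidimensional: it reports how much better or worse things went than expected, but nothing about which features of the outcome changed.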

References

Mirenowicz, J. & Schultz, W. Importance of unpredictability for reward responses in primate dopamine neurons. J. Neurophysiol. 72, 1024–1027 (1994).

Schultz, W. Getting formal with dopamine and reward. Neuron 36, 241–263 (2002).

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT Press, 2018).

Rescorla, R. A. & Wagner, A. R. in Classical Conditioning II: Current Research and Theory (eds Black, A. H. & Prokesy, W. F.) 64–99 (Appleton-Century-Crofts, 1972).

Sutton, R. S. & Barto, A. G. Toward a modern theory of adaptive networks: expectation and prediction. Psychol. Rev. 88, 135–170 (1981).

Dayan, P. Improving generalization for temporal difference learning: the successor representation. Neural Comput. 5, 613–624 (1993).

Lak, A., Stauffer, W. R. & Schultz, W. Dopamine prediction error responses integrate subjective value from different reward dimensions. Proc. Natl Acad. Sci. USA 111, 2343–2348 (2014).

Tobler, P. N., Fiorillo, C. D. & Schultz, W. Adaptive coding of reward value by dopamine neurons. Science 307, 1642–1645 (2005).

Fiorillo, C. D., Tobler, P. N. & Schultz, W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science 299, 1898–1902 (2003).

Roesch, M. R., Calu, D. J. & Schoenbaum, G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat. Neurosci. 10, 1615–1624 (2007).

Schultz, W. Dopamine reward prediction-error signalling: a two-component response. Nat. Rev. Neurosci. 17, 183–195 (2016).

Watabe-Uchida, M., Eshel, N. & Uchida, N. Neural circuitry of reward prediction error. Annu. Rev. Neurosci. 40, 373–394 (2017).

O’Doherty, J. P., Dayan, P., Friston, K., Critchley, H. & Dolan, R. J. Temporal difference models and reward-related learning in the human brain. Neuron 38, 329–337 (2003).

D’Ardenne, K., McClure, S. M., Nystrom, L. E. & Cohen, J. D. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science 319, 1264–1267 (2008).

Rutledge, R. B., Dean, M., Caplin, A. & Glimcher, P. W. Testing the reward prediction error hypothesis with an axiomatic model. J. Neurosci. 30, 13525–13536 (2010).

Haber, S. N., Fudge, J. L. & McFarland, N. R. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. J. Neurosci. 20, 2369–2382 (2000).

Fallon, J. H. & Moore, R. Y. Catecholamine innervation of the basal forebrain. IV. Topography of the dopamine projection to the basal forebrain and neostriatum. J. Comp. Neurol. 180, 545–580, (1978).

Bjorklund, A. & Dunnett, S. B. Dopamine neuron systems in the brain: an update. Trends Neurosci. 30, 194–202 (2007).

Pessiglione, M., Seymour, B., Flandin, G., Dolan, R. J. & Frith, C. D. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature 442, 1042–1045 (2006).

Knutson, B. et al. Amphetamine modulates human incentive processing. Neuron 43, 261–269 (2004).

Schultz, W., Dayan, P. & Montague, P. R. A neural substrate for prediction and reward. Science 275, 1593–1599 (1997).

Glimcher, P. W. Understanding dopamine and reinforcement learning: the dopamine reward prediction error hypothesis. Proc. Natl Acad. Sci. USA 108, 15647–15654 (2011).

Kakade, S. & Dayan, P. Dopamine: generalization and bonuses. Neural Netw. 15, 549–559 (2002).

Starkweather, C. K. & Uchida, N. Dopamine signals as temporal difference errors: recent advances. Curr. Opin. Neurobiol. 67, 95–105 (2021).

Dabney, W. et al. A distributional code for value in dopamine-based reinforcement learning. Nature 577, 671–675 (2020).

Jeong, H. et al. Mesolimbic dopamine release conveys causal associations. Science 378, eabq6740 (2022).

Coddington, L. T., Lindo, S. E. & Dudman, J. T. Mesolimbic dopamine adapts the rate of learning from action. Nature 614, 294–302 (2023).

Kutlu, M. G. et al. Dopamine release in the nucleus accumbens core signals perceived saliency. Curr. Biol. 31, 4748–4761.e8 (2021).

Lee, R. S., Sagiv, Y., Engelhard, B., Witten, I. B. & Daw, N. D. A feature-specific prediction error model explains dopaminergic heterogeneity. Nat. Neurosci. 27, 1574–1586 (2024).

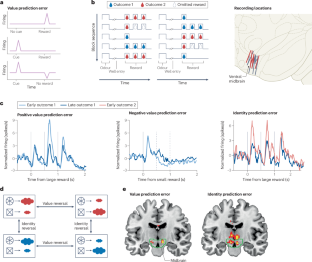

Takahashi, Y. K. et al. Dopamine neurons respond to errors in the prediction of sensory features of expected rewards. Neuron 95, 1395–1405.e3 (2017).

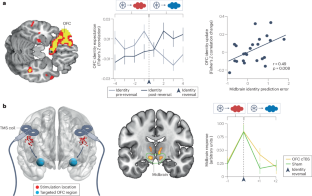

Howard, J. D. & Kahnt, T. Identity prediction errors in the human midbrain update reward-identity expectations in the orbitofrontal cortex. Nat. Commun. 9, 1611 (2018).

Boorman, E. D., Rajendran, V. G., O’Reilly, J. X. & Behrens, T. E. Two anatomically and computationally distinct learning signals predict changes to stimulus-outcome associations in hippocampus. Neuron 89, 1343–1354 (2016).

Suarez, J. A., Howard, J. D., Schoenbaum, G. & Kahnt, T. Sensory prediction errors in the human midbrain signal identity violations independent of perceptual distance. eLife 8, e43962 (2019).

Witkowski, P. P., Park, S. A. & Boorman, E. D. Neural mechanisms of credit assignment for inferred relationships in a structured world. Neuron 110, 2680–2690.e9 (2022).

Liu, Q. et al. Midbrain signaling of identity prediction errors depends on orbitofrontal cortex networks. Nat. Commun. 15, 1704 (2024).

Millidge, B., Song, Y., Lak, A., Walton, M. E. & Bogacz, R. Reward bases: a simple mechanism for adaptive acquisition of multiple reward types. PLoS Comput. Biol. 20, e1012580 (2024).

Papageorgiou, G. K., Baudonnat, M., Cucca, F. & Walton, M. E. Mesolimbic dopamine encodes prediction errors in a state-dependent manner. Cell Rep. 15, 221–228 (2016).

Kim, H. R. et al. A unified framework for dopamine signals across timescales. Cell 183, 1600–1616 (2020).

Ogasawara, T. et al. A primate temporal cortex — zona incerta pathway for novelty seeking. Nat. Neurosci. 25, 50–60 (2022).

Akam, T. & Walton, M. E. What is dopamine doing in model-based reinforcement learning? Curr. Opin. Behav. Sci. 38, 74–82 (2021).

Bromberg-Martin, E. S., Matsumoto, M. & Hikosaka, O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron 68, 815–834 (2010).

Pearce, J. M. & Hall, G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol. Rev. 87, 532–552 (1980).

Pearce et al. in Quantitative Analyses of Behavior Vol. 3 (eds Commons, M. L., Herrnstein, R. J. & Wagner, A. R.) 241–255 (Ballinger, 1982).

Stalnaker, T. A. et al. Dopamine neuron ensembles signal the content of sensory prediction errors. eLife 8, e49315 (2019).

Howard, J. D., Edmonds, D., Schoenbaum, G. & Kahnt, T. Distributed midbrain responses signal the content of positive identity prediction errors. Curr. Biol. 34, 241–4240.e4 (2024).

Garr, E. et al. Mesostriatal dopamine is sensitive to specific cue-reward contingencies. Sci. Adv. https://doi.org/10.1126/sciadv.adn4203 (2023).

Steinberg, E. E. et al. A causal link between prediction errors, dopamine neurons and learning. Nat. Neurosci. 16, 966–973 (2013).

Kamin, L. J. "Attention-like" processes in classical conditioning. In Miami Symposium on the Prediction of Behavior, 1967: Aversive Stimulation (ed. Jones, M. R.) 9–31 (Univ. of Miami Press, 1968).

Keiflin, R., Pribut, H. J., Shah, N. B. & Janak, P. H. Ventral tegmental dopamine neurons participate in reward identity predictions. Curr. Biol. 29, 93–103.e3 (2019).

Holland, P. C. & Rescorla, R. A. The effects of two ways of devaluing the unconditioned stimulus after first and second-order appetitive conditioning. J. Exp. Psychol. Anim. Behav. Process. 1, 355–363 (1975).

Howard, J. D., Gottfried, J. A., Tobler, P. N. & Kahnt, T. Identity-specific coding of future rewards in the human orbitofrontal cortex. Proc. Natl Acad. Sci. USA 112, 5195–5200 (2015).

Stalnaker, T. A. et al. Orbitofrontal neurons infer the value and identity of predicted outcomes. Nat. Commun. 5, 3926 (2014).

Stoll, F. M. & Rudebeck, P. H. Preferences reveal dissociable encoding across prefrontal-limbic circuits. Neuron 112, 2241–2256.e8 (2024).

Burke, K. A., Franz, T. M., Miller, D. N. & Schoenbaum, G. The role of the orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature 454, 340–344 (2008).

Howard, J. D. et al. Targeted stimulation of human orbitofrontal networks disrupts outcome-guided behavior. Curr. Biol. 30, 490–498.e4 (2020).

Rudebeck, P. H., Saunders, R. C., Prescott, A. T., Chau, L. S. & Murray, E. A. Prefrontal mechanisms of behavioral flexibility, emotion regulation and value updating. Nat. Neurosci. 16, 1140–1145 (2013).

Sias, A. C. et al. A bidirectional corticoamygdala circuit for the encoding and retrieval of detailed reward memories. eLife 10, e68617 (2021).

Ostlund, S. B. & Balleine, B. W. Orbitofrontal cortex mediates outcome encoding in Pavlovian but not instrumental learning. J. Neurosci. 27, 4819–4825 (2007).

McDannald, M. A., Saddoris, M. P., Gallagher, M. & Holland, P. C. Lesions of orbitofrontal cortex impair rats’ differential outcome expectancy learning but not conditioned stimulus-potentiated feeding. J. Neurosci. 25, 4626–4632 (2005).

Sias, A. C. et al. Dopamine projections to the basolateral amygdala drive the encoding of identity-specific reward memories. Nat. Neurosci. 27, 728–736 (2024).

Brogden, W. J. Sensory pre-conditioning. J. Exp. Psychol. 25, 323–332 (1939).

Jones, J. L. et al. Orbitofrontal cortex supports behavior and learning using inferred but not cached values. Science 338, 953–956 (2012).

Wang, F., Schoenbaum, G. & Kahnt, T. Interactions between human orbitofrontal cortex and hippocampus support model-based inference. PLoS Biol. 18, e3000578 (2020).

Sharpe, M. J. et al. Dopamine transients are sufficient and necessary for acquisition of model-based associations. Nat. Neurosci. 20, 735–742 (2017).

Esmoris-Arranz, F. J., Miller, R. R. & Matute, H. Blocking of subsequent and antecedent events. J. Exp. Psychol. Anim. Behav. Process. 23, 145–156 (1997).

Kamin, L. J. in Punishment and Aversive Behavior (eds Campbell, B. A. & Church, R. M.) 242–259 (Appleton-Century-Crofts, 1969).

Mackintosh, N. J. A theory of attention: variations in the associability of stimuli with reinforcement. Psychol. Rev. 82, 276–298 (1975).

Hart, E. E., Sharpe, M. J., Gardner, M. P. & Schoenbaum, G. Responding to preconditioned cues is devaluation sensitive and requires orbitofrontal cortex during cue-cue learning. eLife 9, e59998 (2020).

Sharpe, M. J., Batchelor, H. M. & Schoenbaum, G. Preconditioned cues have no value. eLife 6, e28362 (2017).

Wong, F. S., Westbrook, R. F. & Holmes, N. M. ‘Online’ integration of sensory and fear memories in the rat medial temporal lobe. eLife 8, e47085 (2019).

Costa, K. M., Raheja, N., Mirani, J., Sercander, C. & Schoenbaum, G. Striatal dopamine release reflects a domain-general prediction error. Preprint at bioRxiv https://doi.org/10.1101/2023.08.19.553959 (2023).

Moser, E. I., Kropff, E. & Moser, M. B. Place cells, grid cells, and the brain’s spatial representation system. Annu. Rev. Neurosci. 31, 69–89 (2008).

Witten, I. B. et al. Recombinase-driver rat lines: tools, techniques, and optogenetic application to dopamine-mediated reinforcement. Neuron 72, 721–733 (2011).

Ilango, S. et al. Similar roles of substantia nigra and ventral tegmental dopamine neurons in reward and aversion. J. Neurosci. 34, 817–822 (2014).

Covey, D. P. & Cheer, J. F. Accumbal dopamine release tracks the expectation of dopamine neuron-mediated reinforcement. Cell Rep. 27, 481–490 (2019).

Wolff, A. R. & Saunders, B. T. Sensory cues potentiate VTA dopamine mediated reinforcement. eNeuro 11, ENEURO.0421-0423.2024 (2024).

Chang, C. Y. et al. Brief optogenetic inhibition of VTA dopamine neurons mimics the effects of endogenous negative prediction errors during Pavlovian over-expectation. Nat. Neurosci. 19, 111–116 (2016).

Chang, C. Y., Gardner, M., Di Tillio, M. G. & Schoenbaum, G. Optogenetic blockade of dopamine transients prevents learning induced by changes in reward features. Curr. Biol. 27, 3480–3486 (2017).

Chang, C. Y., Gardner, M. P. H., Conroy, J. S., Whitaker, L. R. & Schoenbaum, G. Brief, but not prolonged, pauses in the firing of midbrain dopamine neurons are sufficient to produce a conditioned inhibitor. J. Neurosci. 38, 8822–8830 (2018).

Millard, S. J. et al. Cognitive representations of intracranial self-stimulation of midbrain dopamine neurons depend on stimulation frequency. Nat. Neurosci. 27, 1253–1259 (2024).

Takahashi, Y. K. et al. Dopaminergic prediction errors in the ventral tegmental area reflect a multithreaded predictive model. Nat. Neurosci. 26, 830–839 (2023).

Gardner, M. P. H., Schoenbaum, G. & Gershman, S. J. Rethinking dopamine as generalized prediction error. Proc. Biol. Sci. 285, 20181645 (2018).

Gershman, S. J. The successor representation: its computational logic and neural substrates. J. Neurosci. 38, 7193–7200 (2018).

Langdon, A. J., Sharpe, M. J., Schoenbaum, G. & Niv, Y. Model-based predictions for dopamine. Curr. Opin. Neurobiol. 49, 1–7 (2018).

German, D. C., Schlusselberg, D. S. & Woodward, D. J. Three-dimensional computer reconstruction of midbrain dopaminergic neuronal populations: from mouse to man. J. Neural Transm. 57, 243–254 (1983).

Kahnt, T. in Encyclopedia of the Human Brain 2nd edn (ed. Grafman, J. H.) 387–400 (Elsevier, 2025).

Tegelbeckers, J., Porter, D. B., Voss, J. L., Schoenbaum, G. & Kahnt, T. Lateral orbitofrontal cortex integrates predictive information across multiple cues to guide behavior. Curr. Biol. 33, 4496–4504.e5 (2023).

Acknowledgements

This work was supported by the Intramural Research Program at the National Institute on Drug Abuse. The opinions expressed in this article are the authors’ own and do not reflect the view of the National Institutes of Health, Department of Health and Human Services. The authors have no conflicts of interest to report.

Author information

Contributions

The authors contributed equally to all aspects of the article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Reviews Neuroscience thanks Naoshige Uchida, who co-reviewed with Malcolm Campbell, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

About this article

Cite this article

Kahnt, T., Schoenbaum, G. The curious case of dopaminergic prediction errors and learning associative information beyond value. Nat. Rev. Neurosci. 26, 169–178 (2025). https://doi.org/10.1038/s41583-024-00898-8

DOI: https://doi.org/10.1038/s41583-024-00898-8